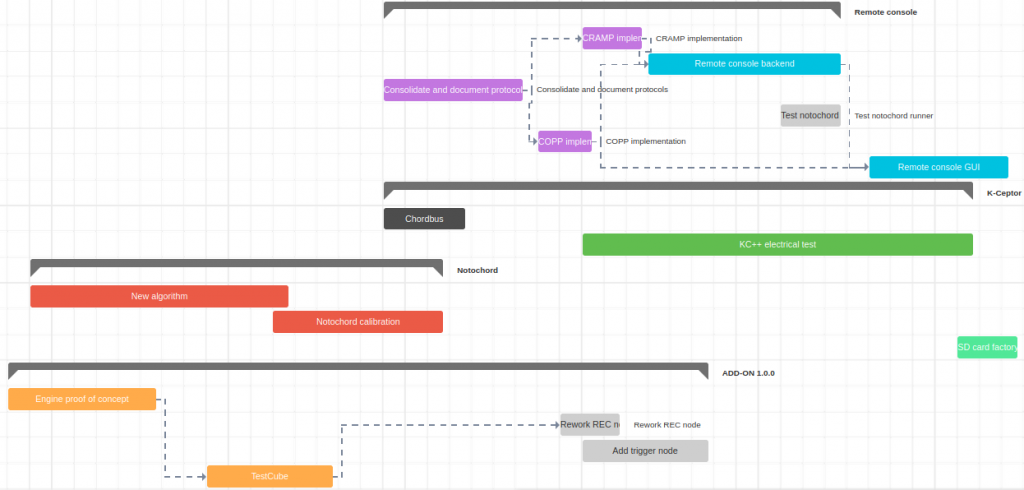

Many changes have occurred in the Chordata framework lately, and many more are coming in order to achieve the complete and flexible motion capture solution we envision.

An initial milestone involved raising funds through our first Kickstarter campaign, which we have just successfully completed! These funds will allow us to create and produce the first production version of the hardware. Another important aspect of this system is software, and since this framework relies on many software pieces that interact to obtain the capture, it is easy for our users to lose track of all of them. That’s why we’ve been working on a revised plan that will allow everyone to have a clear view of the changes to come.

Of course, roadmaps in software (and in particular those of open-source projects) are living documents. Things might change with time, redistribution of resources and, more importantly, your feedback, so please let us know your ideas in this thread on our forum. We are also continuously collecting feedback on all our communication channels.

KCeptor++ embedded development

August-September 2020

The new KCeptor will include an ARM microprocessor which will pre-process the data collected from the sensors before sending it to the Notochord. In the first phase, we will develop the software that will run inside this microcontroller.

Notochord 1.0.0 as a Python module

October-December 2020

This module will be based on the current core of the system: the Notochord program. It is a C++ application that takes care of all the low-level operations, like managing and reading the sensors. The C++ core will be wrapped in a Python interface and used to build a new default version of the Notochord program. The module will then allow advanced users to tweak the behavior of the default Notochord, or even rewrite it from scratch.

Milestone: Consolidation of the Chordata public control API

End of 2020

By this point, in addition to the low-level Python API that the new Notochord module will expose, the remote communication components of the Chordata Motion framework will be complete. These components are the Chordata Motion remote action management protocol (CRAMP) and the Chordata Motion open pose protocol (COPP). You can read more about them in this article. In a nutshell, they allow any remote Chordata Motion component to completely control the capture, without requiring any manual user intervention.

Remote console

January – April 2021

This software component is partially done, but it has been on hold while waiting for the consolidation of the remote protocols. It is a progressive web app that will allow users to start capturing from any device right away, without installing anything. During this period we will make many changes to the existing codebase, including improvements to the user experience and the implementation of the new protocols.

External integrations

March – August 2021

At this point, we will have a robust, flexible framework capable of sharing capture data with any other software. It will be time for us to write SDKs to make the task of integrating the Chordata Motion captures into many popular platforms easier. The plan is to start with the three main videogame engines: Godot, Unreal, and Unity. We will keep collecting feedback about which integrations to prioritize. Many members of our community are working on their own integrations right now, so some of these might be available as contributed components before our team even starts working on them.

Translation in space, overall heading correction, etc.

April – September 2021

Until now, our main focus has been to create a solid and flexible inertial mocap platform. To do that, we started by implementing only the core functionality of inertial mocap: inner pose capture.

Of course, once the core features are stable, a complete mocap system needs some derived functionality. By far the most requested of these features is the ability to capture the spatial translation of the performer. At this stage we will implement this and other features, such as overall heading correction for the pose calibration, which will allow the performer to face any direction while calibrating.
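To give a rough idea of what overall heading correction can look like, here is a minimal Python sketch. It is not the Chordata implementation, which does not exist yet. It assumes orientations are unit quaternions in `(w, x, y, z)` order, that the vertical axis is Z, and that the heading is the yaw of a reference sensor at calibration time. All function names are hypothetical. The idea is to measure the yaw while the performer calibrates and then pre-multiply every orientation by the opposite rotation, so that whatever direction the performer was facing becomes the canonical "forward".

```python
import math

def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def yaw_of(q):
    """Yaw (rotation about the vertical Z axis, in radians) of a unit quaternion."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))

def yaw_quat(angle):
    """Quaternion for a pure rotation of `angle` radians about the Z axis."""
    return (math.cos(angle / 2.0), 0.0, 0.0, math.sin(angle / 2.0))

def heading_correction(q_calibration):
    """Rotation that cancels the heading measured during calibration."""
    return yaw_quat(-yaw_of(q_calibration))

def apply_correction(correction, q):
    """Express an orientation in the heading-corrected frame."""
    return quat_multiply(correction, q)

# Example: the performer calibrates while facing 90 degrees away from "forward".
q_cal = yaw_quat(math.pi / 2.0)
correction = heading_correction(q_cal)
corrected = apply_correction(correction, q_cal)  # approximately the identity rotation
```

Only the yaw is removed, so any tilt of the sensor (pitch and roll) is preserved. A real system would also have to deal with magnetic disturbances and sensor drift, which this sketch ignores.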